Review: A Half-Built Garden, by Ruthanna Emrys

| Publisher: | Tordotcom |
| Copyright: | 2022 |
| ISBN: | 1-250-21097-6 |
| Format: | Kindle |
| Pages: | 340 |

The climate apocalypse has happened. Humans woke up to the danger, but a

little bit too late. Over one billion people died. But the world on the

other side of that apocalypse is not entirely grim. The corporations

responsible for so much of the damage have been pushed out of society and

isolated on their independent "aislands," traded with only grudgingly for

the few commodities the rest of the world has not yet learned how to

manufacture without them. Traditional governments have largely collapsed,

although they cling to increasingly irrelevant trappings of power. In

their place arose the watershed networks: a new way of living with both

nature and other humans, built around a mix of anarchic consensus and

direct democracy, with conservation and stewardship of the natural

environment at its core.

Therefore, when the aliens arrive near Bear Island on the Potomac River,

they're not detected by powerful telescopes and met by military jets.

Instead, their waste sets off water sensors, and they're met by the two

women on call for alert duty, carrying a nursing infant and backed by the

real-time discussion and consensus technology of the watershed's dandelion

network. (Emrys is far from the first person to name something a

"dandelion network," so be aware that the usage in this book seems

unrelated to the charities or blockchain network.)

This is a first contact novel, but it's one that skips over the typical

focus of the subgenre. The alien Ringers are completely fluent in English

down to subtle nuance of emotion and connotation (supposedly due to

observation of our radio and TV signals), have translation devices, and in

some cases can make our speech sounds directly. Despite significantly

different body shapes, they are immediately comprehensible; differences

are limited mostly to family structure, reproduction, and social norms.

This is Star Trek first contact, not the type more typical of

written science fiction. That feels unrealistic, but it's also obviously

an authorial choice to jump directly to the part of the story that Emrys

wants to write.

The Ringers have come to save humanity. In their experience,

technological civilization is inherently incompatible with planets.

Technology will destroy the planet, and the planet will in turn destroy

the species unless they can escape. They have reached other worlds

multiple times before, only to discover that they were too late and

everyone was already dead. This is the first time they've arrived in time,

and they're eager to help humanity off its dying planet to join them in

the Dyson sphere of

space habitats they are constructing. Planets, to them, are a nest and a

launching pad, something to eventually abandon and break down for spare

parts.

The small, unexpected wrinkle is that Judy, Carol, and the rest of their

watershed network are not interested in leaving Earth. They've finally

figured out the most critical pieces of environmental balance. Earth is

going to get hotter for a while, but the trend is slowing. What they're

doing is working. Humanity would benefit greatly from Ringer technology

and the expertise that comes from managing closed habitat ecosystems, but

they don't need rescuing.

This goes over about as well as a toddler saying that playing in the road

is perfectly safe.

This is a fantastic hook for a science fiction novel. It does exactly

what a great science fiction premise should do: takes current concerns

(environmentalism, space boosterism, the debatable primacy of humans as a

species, the appropriate role of space colonization, the tension between

hopefulness and doomcasting about climate change) and uses the freedom of

science fiction to twist them around and come at them from an entirely

different angle.

The design of the aliens is excellent for this purpose. The Ringers are

not one alien species; they are two, evolved on different planets in the

same system. The plains dwellers developed space flight first and went to

meet the tree dwellers, and while their relationship is not entirely

without hierarchy (the plains dwellers clearly lead on most matters), it's

extensively symbiotic. They now form mixed families of both species, and

have a rich cultural history of stories about first contact, interspecies

conflicts and cooperation, and all the perils and misunderstandings that

they successfully navigated. It makes their approach to humanity more

believable to know that they have done first contact before and are

building on a model. Their concern for humanity is credibly sincere. The

joining of two species was wildly successful for them and they truly want

to add a third.

The politics on the human side are satisfyingly complicated. The

watershed network may have made first contact, but the US government (in

the form of NASA) is close behind, attempting to lean on its widely

ignored formal power. The corporations are farther away and therefore

slower to arrive, but the alien visitors have a damaged ship and need

space to construct a subspace beacon and Asterion is happy to offer a site

on one of its New Zealand islands. The corporate representatives are

salivating at the chance to escape Earth and its environmental regulation

for uncontrolled space construction and a new market of trillions of

Ringers. NASA's attitude is more measured, but their representative is

easily persuaded that the true future of humanity is in space. The work

the watershed networks are doing is difficult, uncertain, and involves a

lot of sacrifice, particularly for corporate consumer lifestyles. With

such an attractive alien offer on the table, why stay and work so hard for

an uncertain future? Maybe the Ringers are right.

And then the dandelion networks that the watersheds use as the core of

their governance and decision-making system all crash.

The setup was great; I was completely invested. The execution was more

mixed. There are some things I really liked, some things that I thought

were a bit too easy or predictable, and several places where I wish Emrys

had dug deeper and provided more detail. I thought the last third of the

book fizzled a little, although some of the secondary characters Emrys

introduces are delightful and carry the momentum of the story when the

politics feel a bit lacking.

If you tried to form a mental image of ecofeminist political science

fiction with 1970s utopian sensibilities, but updated for the concerns of

the 2020s, you would probably come very close to the politics of the

watershed networks. There are considerably more breastfeedings and diaper

changes than in the average SF novel. Two of the primary characters are

transgender, but with very different experiences with transition. Pronoun

pins are a ubiquitous article of clothing. One of the characters has a

prosthetic limb. Another character who becomes important later in the

story codes as autistic. None of this felt gratuitous; the characters do

come across as obsessed with gender, but in a way that I found believable.

The human diversity is well-integrated with the story, shapes the

characters, creates practical challenges, and has subtle (and sometimes

not so subtle) political ramifications.

But, and I say this with love because while these are not quite my people

they're closely adjacent to my people, the social politics of this book

are a very specific type of white feminist collaborative utopianism. When

religion makes an appearance, I was completely unsurprised to find that

several of the characters are Jewish. Race never makes a significant

appearance at all. It's the sort of book where the throw-away references

to other important watershed networks include African ones, and the

characters would doubtless try to be sensitive to racial issues if they

came up, but somehow they never do. (If you're wondering if there's

polyamory in this book, yes, yes there is, and also I suspect you know

exactly what culture I'm talking about.)

This is not intended as a criticism, just more of a calibration. All

science fiction publishing houses could focus only on this specific

political perspective for a year and the results would still be dwarfed by

the towering accumulated pile of thoughtless paeans to capitalism.

Ecofeminism has a long history in the genre but still doesn't show up in

that many books, and we're far from exhausting the space of possibilities

for what a consensus-based politics could look like with extensive

computer support. But this book has a highly specific point of view,

enough so that there won't be many thought-provoking surprises if you're

already familiar with this school of political thought.

The politics are also very earnest in a way that I admit provoked a bit of

eyerolling. Emrys pushes all of the political conflict into the contrasts

between the human factions, but I would have liked more internal

disagreement within the watershed networks over principles rather than

tactics. The degree of ideological agreement within the watershed group

felt a bit unrealistic. But, that said, at least politics truly matters

and the characters wrestle directly with some tricky questions. I would

have liked to see more specifics about the dandelion network and the exact

mechanics of the consensus decision process, since that sort of thing is

my jam, but we at least get more details than are typical in science

fiction. I'll take this over cynical libertarianism any day.

Gender plays a huge role in this story, enough so that you should avoid

this book if you're not interested in exploring gender conceptions. One

of the two alien races is matriarchal and places immense social value on

motherhood, and it's culturally expected to bring your children with you

for any important negotiation. The watersheds actively embrace this, or

at worst find it comfortable to use for their advantage, despite a few

hints that the matriarchy of the plains aliens may have a very serious

long-term demographic problem. In an interesting twist, it's the

mostly-evil corporations that truly challenge gender roles, albeit by

turning it into an opportunity to sell more clothing.

The Asterion corporate representatives are, as expected, mostly the

villains of the plot: flashy, hierarchical, consumerist, greedy, and

exploitative. But gender among the corporations is purely a matter of

public performance, one of a set of roles that you can put on and off as

you choose and signal with clothing. They mostly use neopronouns, change

pronouns as frequently as their clothing, and treat any question of body

plumbing as intensely private. By comparison, the very 2020 attitudes of

the watersheds towards gender felt oddly conservative and essentialist,

and the main characters get flustered and annoyed by the ever-fluid

corporate gender presentation. I wish Emrys had done more with this.

As you can tell, I have a lot of thoughts and a lot of quibbles. Another

example: computer security plays an important role in the plot and was

sufficiently well-described that I have serious questions about the system

architecture and security model of the dandelion networks. But, as with

decision-making and gender, the more important takeaway is that Emrys

takes enough risks and describes enough interesting ideas that there's a

lot of meat here to argue with. That, more than getting everything right,

is what a good science fiction novel should do.

A Half-Built Garden is written from a very specific political

stance that may make it a bit predictable or off-putting, and I thought

the tail end of the book had some plot and resolution problems, but

arguing with it was one of the more intellectually satisfying science

fiction reading experiences I've had recently. You have to be in the

right mood, but recommended for when you are.

Rating: 7 out of 10

But I'm drifting from the topic/movie.

Like each month, have a look at the work funded by Freexian's Debian LTS offering.

I'm calling time on DNSSEC. Last week, prompted by a change in my DNS hosting setup, I began removing it from the few personal zones I had signed. Then this Monday the .nz ccTLD experienced a multi-day availability incident triggered by the annual DNSSEC key rotation process. This incident broke several of my unsigned zones, which led me to say very unkind things about DNSSEC on Mastodon and now I feel compelled to more completely explain my thinking:

For almost all domains and use-cases, the costs and risks of deploying DNSSEC outweigh the benefits it provides. Don't bother signing your zones.

The .nz incident, while topical, is not the motivation or the trigger for this conclusion. Had it been a novel incident, it would still have been annoying, but novel incidents are how we learn so I have a small tolerance for them. The problem with DNSSEC is precisely that this incident was not novel, just the latest in a long and growing list.

It's a clear pattern. DNSSEC is complex and risky to deploy. Choosing to sign your zone will almost inevitably mean that you will experience lower availability for your domain over time than if you leave it unsigned. Even if you have a team of DNS experts maintaining your zone and DNS infrastructure, the risk of routine operational tasks triggering a loss of availability (unrelated to any attempted attacks that DNSSEC may thwart) is very high - almost guaranteed to occur. Worse, because of the nature of DNS and DNSSEC these incidents will tend to be prolonged and out of your control to remediate in a timely fashion.

The only benefit you get in return for accepting this almost certain reduction in availability is trust in the integrity of the DNS data a subset of your users (those who validate DNSSEC) receive. Trusted DNS data that is then used to communicate across an untrusted network layer. An untrusted network layer which you are almost certainly protecting with TLS which provides a more comprehensive and trustworthy set of security guarantees than DNSSEC is capable of, and provides those guarantees to all your users regardless of whether they are validating DNSSEC or not.

In summary, in our modern world where TLS is ubiquitous, DNSSEC provides only a thin layer of redundant protection on top of the comprehensive guarantees provided by TLS, but adds significant operational complexity, cost and a high likelihood of lowered availability.

In an ideal world, where the deployment cost of DNSSEC and the risk of DNSSEC-induced outages were both low, it would absolutely be desirable to have that redundancy in our layers of protection. In the real world, given the DNSSEC protocol we have today, the choice to avoid its complexity and rely on TLS alone is not at all painful or risky to make as the operator of an online service. In fact, it's the prudent choice that will result in better overall security outcomes for your users.

Ignore DNSSEC and invest the time and resources you would have spent deploying it in improving your TLS key and certificate management.

Ironically, the one use-case where I think a valid counter-argument for this position can be made is TLDs (including ccTLDs such as .nz). Despite its many failings, DNSSEC is an Internet Standard, and as infrastructure providers, TLDs have an obligation to enable its use. Unfortunately this means that everyone has to bear the costs, complexities and availability risks that DNSSEC burdens these operators with. We can't avoid that fact, but we can avoid creating further costs, complexities and risks by choosing not to deploy DNSSEC on the rest of our non-TLD zones.

It's been a very long time since I last blogged about e-voting, although

some might remember it's been a topic I have long worked on;

particularly, it was the topic of my 2018 Master's thesis, plus some five

articles I wrote in the 2010-2018 period. After the thesis, I have to admit

I got weary of the subject, and haven't pursued it anymore.

So, I was saddened and dismayed to read that once again, as has

already happened, the electoral authorities would set up a pilot

e-voting program in the local elections this year, one that would probably

lead to a wider deployment next year, in the Federal elections.

This year (this week!), two States will have elections for their

Governors and local Legislative branches: Coahuila (North, bordering

with Texas) and Mexico (Center, surrounding Mexico City). They are

very different states, demographically and in their development

level.

Pilot programs with e-voting booths have been seen in four states

TTBOMK in the last ~15 years: Jalisco (West), Mexico City, State of

Mexico and Coahuila. In Coahuila, several universities have teamed up

with the Electoral Institute to develop their e-voting booth; a good

thing that I can say about how this has been done in my country is

that, at least, the Electoral Institute is providing their own

implementations, instead of sourcing them from e-booth vendors (which have

their long, tragic history mostly in the USA, but also in other

places). Not only that: They are subjecting the machines to audit

processes. Not open audit processes, as demanded by academics in the

field, but nevertheless, external, rigorous audit processes.

But still, what I and other colleagues with a Computer Security

background oppose is not a specific e-voting implementation, but

the adoption of e-voting in general. If for nothing else, because of

the extra complexity it brings, because of the many more checks that

have to be put in place, and because, as programmers, we are aware

of the ease with which bugs can creep into any given

implementation: both honest bugs (mistakes) and, much worse, bugs

that are secretly requested and paid for.

Anyway, leave this bit aside for a while. I'm not implying there

was any ill intent in the design or implementation of these e-voting

booths.

Two days ago, the Electoral Institute announced there was an important

bug found in the Coahuila implementation. The bug consists, as far as

I can understand from the information reported in newspapers, in:

Some time ago, on an early Friday afternoon, our internet connection died. After a reasonable time had passed we called customer service; they told us that they would look into it and then call us back.

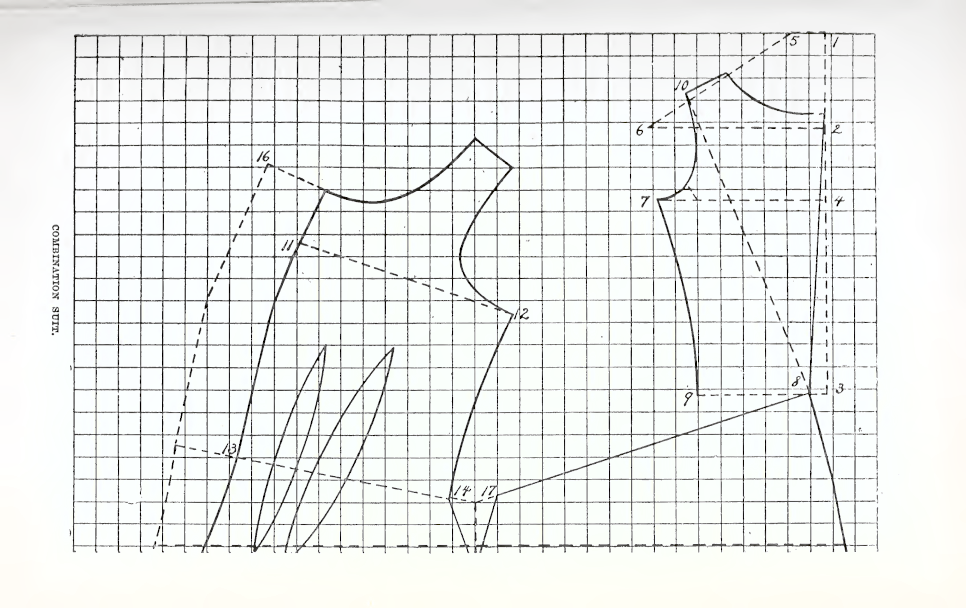

On Friday evening we had not heard from them, and I was starting to get worried. At the time in the evening when I would have been relaxing online I grabbed the first Victorian sewing-related book I found on my hard disk and started to read it.

For the record, it wasn't actually Victorian: it was Margaret J. Blair, System of Sewing and Garment Drafting, from 1904, but I also had available for comparison the earlier and smaller Margaret Blair, System of Garment Drafting, from 1897.

Anyway, this book had a system to draft a pair of combinations (chemise top + drawers); and months ago I had already tried to draft a pair from another system, but they didn't really fit and they were dropped low on the priority list, so on a whim I decided to try and draft them again with this new-to-me system.

Around 23:00 the pattern was ready, and I realized that my SO had gone to sleep without waiting for me, as I looked too busy to be interrupted.

The next few days were quite stressful (we didn't get our internet back until Wednesday) and while I couldn't work at my day job I didn't sew as much as I could have done, but by the end of the week I had an almost complete mockup from an old sheet, and could see that it wasn't great, but it was a good start.

One reason why the mockup took a whole week is that of course I started to sew by machine, but then I wanted flat-felled seams, and felling them by hand is so much neater, isn't it?

And let me just say, I'm grateful for the fact that I don't depend on streaming services for media, but I have a healthy mix of DVDs and stuff I had already temporarily downloaded to watch later, because handsewing and being stressed out without watching something is not really great.

Anyway, the mockup was a bit short on the crotch, but by the time I could try it on and be sure, I was invested enough in it that I decided to work around the issue by inserting a strip of lace around the waist.

And then I went back to the pattern to fix it properly, and found out that I had drafted the back of the drawers completely wrong, making a seam shorter rather than longer as it should have been. ooops.

I fixed the pattern, and then decided that YOLO and cut the new version directly on some lightweight linen fabric I had originally planned to use in this project.

The result is still not perfect, but good enough, and I finished it with a very restrained amount of lace at the neckline and hems, wore it one day when the weather was warm (loved the linen on the skin) and it's ready to be worn again when the weather is back to being warm (hopefully not too soon).

The last problem was taking pictures of this underwear in a way that preserves decency (and it even had to be outdoors, for the light!).

This was solved by wearing leggings and a matched long sleeved shirt under the combinations, and then promptly forgetting everything about decency and, well, you can see what happened.

The pattern is, as usual, published on my pattern website as #FreeSoftWear.

And then, I started thinking about knits.

In the late Victorian and Edwardian eras knit underwear was a thing, also thanks to the influence of various aspects of the rational dress movement; reformers such as Gustav Jäger advocated for wool underwear, but mail order catalogues from the era such as https://archive.org/details/cataloguefallwin00macy (starting from page 67) have listings for both cotton and wool ones.

From what I could find, back then they would have been either handknit at home or made to shape on industrial knitting machines; patterns for the former are available online, but the latter would probably require a knitting machine that I don't currently have.

However, this is underwear that is not going to be seen by anybody, and I believe that by using flat knit fabric one can get a decent functional approximation.

In The Stash I have a few meters of a worked cotton jersey with a pretty comfy feel, and to make a long story short: this happened.

I suspect that the linen one will get worn a lot this summer (linen on the skin: nothing else needs to be said), while the cotton one will be stored away for winter. And then I may make a couple more, if I find out that I'm using them enough.

I've been watching the neovim community for a while and what seems like a cambrian explosion of plugins emerging. A few weeks back I decided to spend most of a "day of learning" on investigating some of the plugins and technologies that I'd read about: Language Server Protocol, TreeSitter, neorg (a grandiose organiser plugin), etc.

It didn't go so well. I spent most of my time fighting version incompatibilities or tracing through scant documentation or code to figure out which plugin was incompatible with which other.

There's definitely a line where crossing it is spending too much time playing with your tools instead of creating. On the other hand, there's definitely value in honing your tools and learning about new technologies. Everyone's line is probably in a different place. I've come to the conclusion that I don't have the time or inclination (or both) to approach exploring the neovim universe in this way. There exist a number of plugin "distributions" (such as LunarVim): collections of pre-configured and integrated plugins that you can try to use out-of-the-box. Next time I think I'll pick one up and give that a try, independently from my existing configuration, and see which ideas from it I might like to adopt.

shared vimrcs

Some folks upload their vim or neovim configurations in their entirety for others to see. I noticed Jess Frazelle had published hers so I took a look. I suppose one could evaluate a bunch of plugins and configuration in isolation using a shared vimrc like this, in the same way as a distribution.

bufferline

Amongst the plugins she uses was bufferline, a plugin to re-work neovim's tab bar to behave like tab bars from more conventional editors[1]. I don't make use of neovim's tabs at all[2], so I would lose nothing having the (presently hidden) tab bar reworked, so I thought I'd give it a go.

I had to disable an existing plugin, lightline, which I've had enabled for years but wasn't sure I was getting much value from. Apparently it also messes with the tab bar! Disabling it, at least for now, means I'll find out if I miss it.

I am already using vim-buffergator as a means of seeing and managing open buffers: a hotkey opens a sidebar with a list of open buffers, to switch between or close. Bufferline gives me a more immediate, always-present view of open buffers, which is faintly useful, but not by much. Perhaps I'd like it more if I were coming from an editor that had made it more of an expected feature. Two things I noticed about it that aren't especially useful for me: when browsing around vimwiki pages, I quickly open a lot of buffers, and the horizontal line fills up very quickly. Even when I'm not, I habitually have quite a lot of buffers open, and the horizontal line is quickly overwhelmed.

I have found myself closing open buffers with the mouse, which I didn't do before.

vert

Since I have brought up a neovim UI feature (tabs), I thought I'd briefly mention my new favourite neovim built-in command:

Like each month, have a look at the work funded by Freexian's Debian LTS offering.